New facial analysis models recognize eight facial expression states of the people in a video, plot positivity and negativity trends, and support a broader range of face poses.

OULU, FINLAND – Valossa today rolled out a major upgrade to its unique emotion analytics recognition model, which produces far more detailed data on the behavior of people in videos.

One of the most important updates is the ability to interpret facial expressions and emotions from non-frontal poses. This makes it possible to detect emotional states and track sentiment shifts between positivity and negativity for all faces in a video.

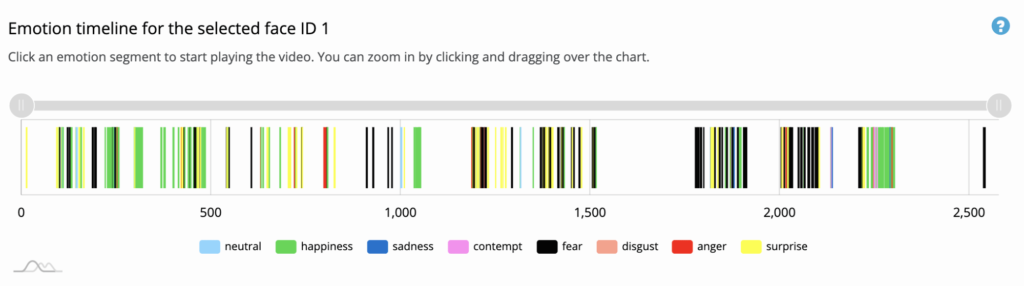

With the updated model, users can inspect the emotional timeline in the Valossa Portal, which provides convenient graphical tools for finding emotional highlights throughout a video and for exporting trend and statistics data as CSV output. The data is also available to system developers via a REST API.

“The new emotion analysis models are a significant addition to our broad video analysis offering,” says CEO Mika Rautiainen. “Our customers are using facial analysis to understand people’s behavior in consumer research videos, gauge the sentiment of potential influencers for product marketing purposes, analyze how people react to their products, and investigate behavioral changes as people interact with others and with their environment.”

Perhaps most interestingly, emotion analytics is also being used to create new experiences in live streams. This was demonstrated with YLE in June, when Valossa AI acted as a game show judge in a live comedy contest; the event, with live AI analysis, was streamed via the nationwide YLE Areena service. Emotion analysis is also one of the cornerstones of a new AI-generated video highlights solution, which helps media companies produce short clips for marketing promotions and dynamic VoD services.

“One of the clear benefits of using Valossa AI for emotion and expression analysis is that it can also extract broader contextual understanding to support interpretation of the emotion output,” explains Mika Rautiainen. “In video content, speech and visuals contain cues that contribute to the narrative of a scene. Our analysis engine is able to interpret a scene in which an actor is smiling while firing a gun. Despite the narrow reading of positivity, such a scene may not be suitable for a daytime film promotion, and our AI will automatically leave it out of such highlight reels. Another example is analyzing influencer videos: if a video host laughs continuously while using derogatory terms, he or she is not a good candidate to promote a brand despite the positive facial expression. There are plenty of examples like this across various use cases in the video analytics space.”

Interested? It is easy to get started with the new engine. Request a demo account for our Portal and describe your use case for emotion analysis of videos. Once you have access to the account, use the simple drag-and-drop interface to start testing our analytics tools with your own videos.

Find out more about Valossa AI solutions

For any questions, contact us at sales@valossa.com