Introducing Valossa Moderator™: AI for sensitive content moderation. Gain deep content insights to identify inappropriate and harmful content. Protect your viewers and advertisers with the GARM framework.

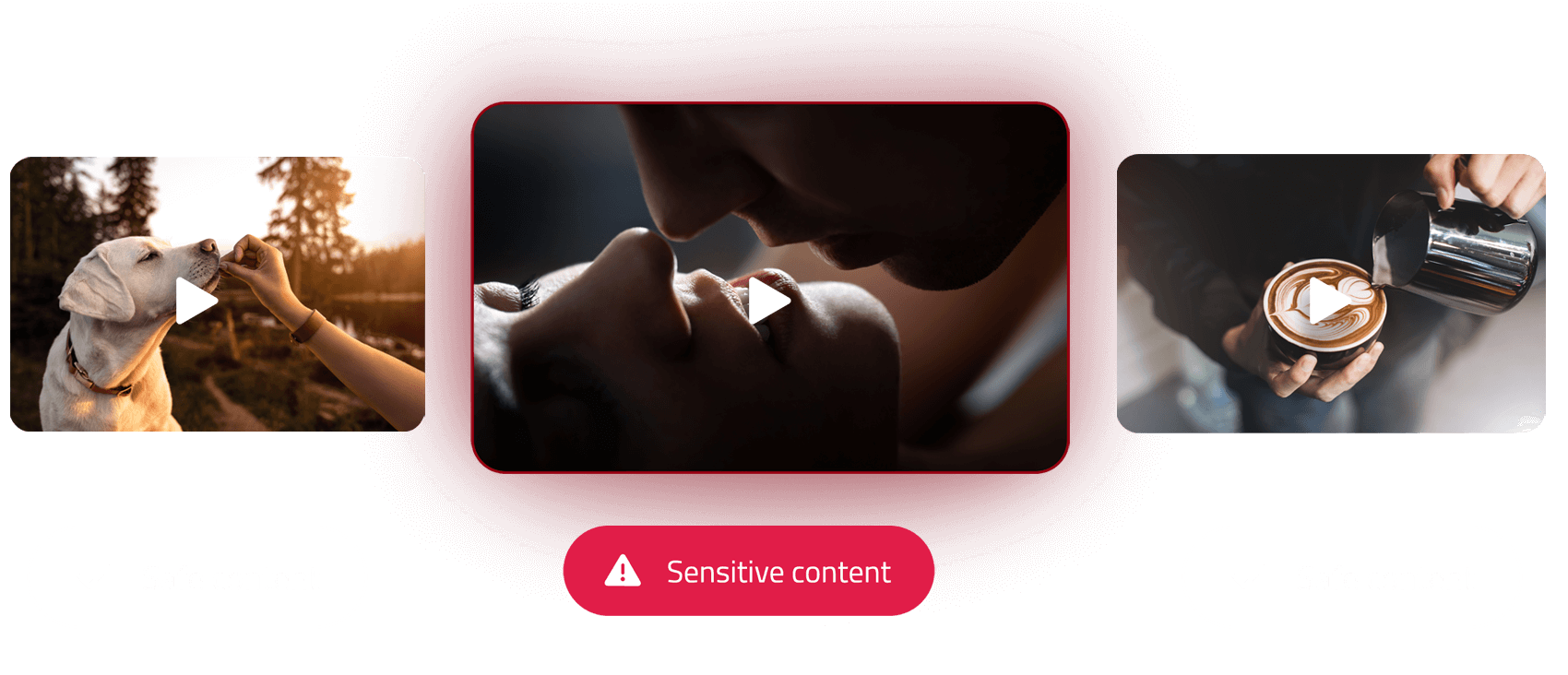

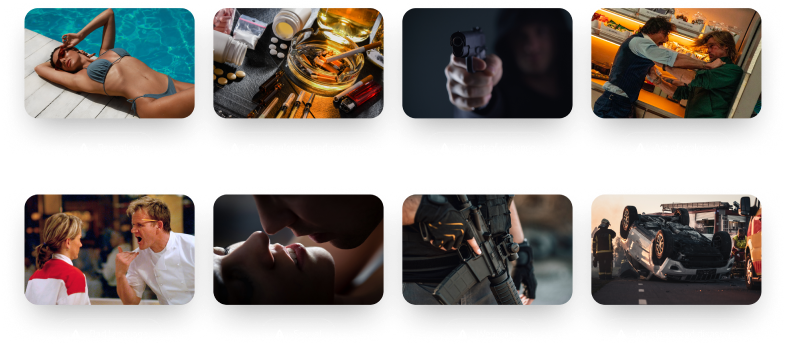

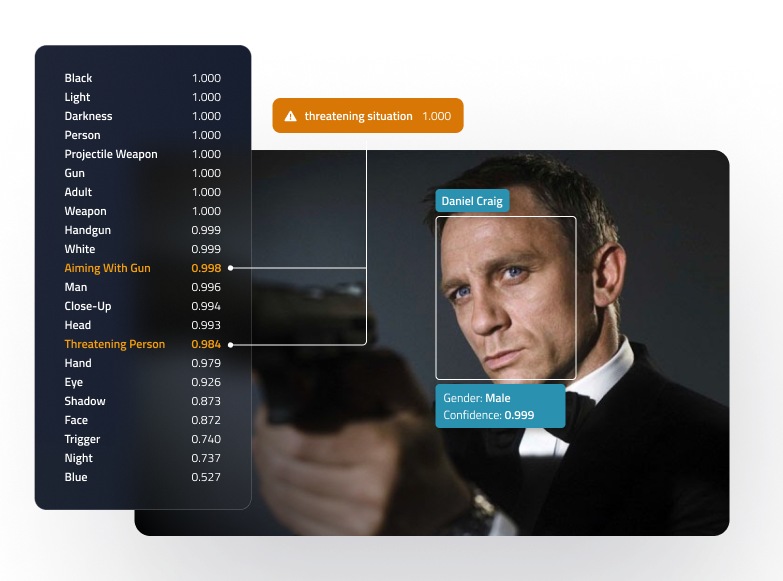

Valossa AI sees and hears what’s inside videos to detect explicit and revealing content, violence, injuries and threats, accidents and disasters, substance use, and guns and weapons. It also understands spoken bad language, as well as sensitive topics such as social issues, crime, death, and hateful language.

Valossa provides a comprehensive, two-tiered analysis of your content across sensitive categories: sensual, sexual, violence, violent outcomes, substance use, accidents and disasters, and bad language. Its cognitive service recognizes nuanced differences between extreme and milder forms of such content.

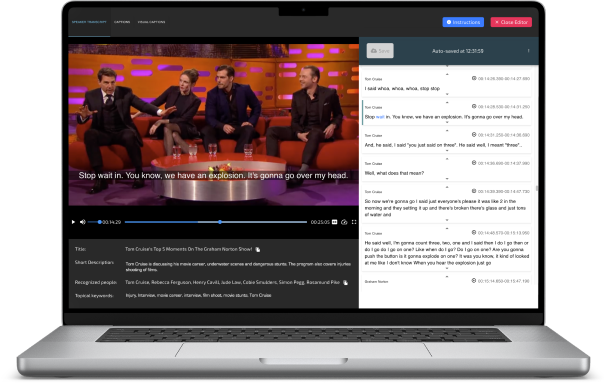

Integrate in-depth sensitive content analysis into your applications through Valossa Metadata, a detailed output that contains all relevant analysis descriptions.

Help moderation teams scale effectively when handling sensitive content across video platforms. Valossa Reports provide interactive compliance report summaries for instant inspection of results, so teams can complete reviews faster than by watching each video linearly.